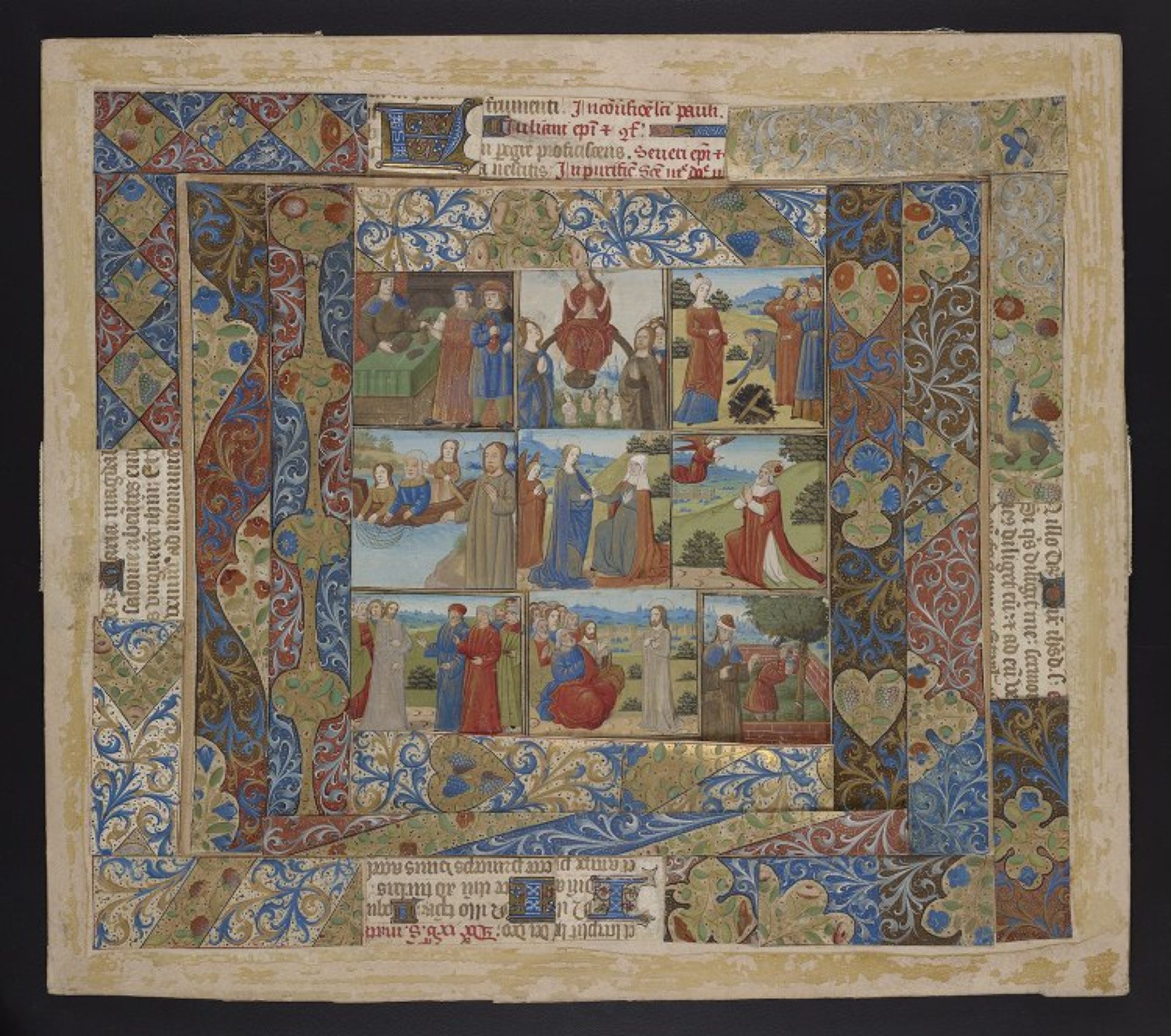

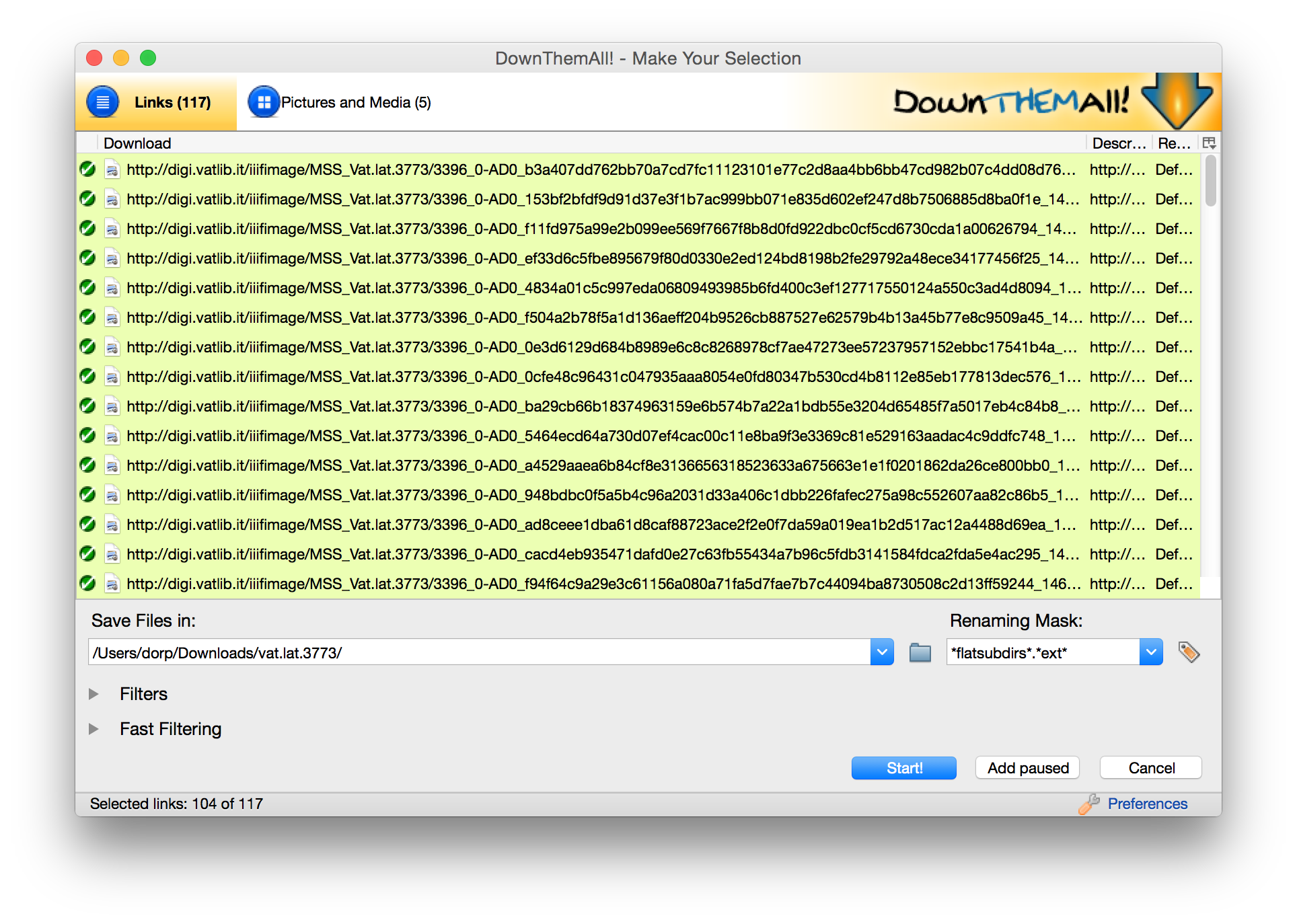

IIIF manifests are great, but what if you want to work with digital images outside of a IIIF interface? I’ve figured out a few different ways to use IIIF manifests to download all the images from a manuscript. The exact approach will vary, since different institutions construct their image URLs in different ways. Here’s the first approach, which is fairly straightforward and uses e-codices as an example. Tomorrow I’ll publish a second post using the Vatican Digital Library. Please remember that most institutions license their images, so don’t repost or publish images unless the institution specifically allows this in their license.
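As a rough illustration of the general idea (not the DownThemAll method from the full post), here is a minimal Python sketch that pulls image URLs out of a manifest. It assumes a IIIF Presentation 2 manifest (`sequences`, `canvases`, `images`) whose images point to a IIIF Image API 2 service; the function name `image_urls` and the `example.org` URLs are made up for the example, and a real institution's manifest may be laid out differently.

```python
def image_urls(manifest, size="full"):
    """Collect one download URL per image in a IIIF Presentation 2 manifest.

    Assumes Image API 2 URL syntax: {service}/{region}/{size}/{rotation}/{quality}.{format}
    """
    urls = []
    for sequence in manifest.get("sequences", []):
        for canvas in sequence.get("canvases", []):
            for image in canvas.get("images", []):
                resource = image.get("resource", {})
                service = resource.get("service", {})
                # Some manifests give a list of services; take the first one.
                if isinstance(service, list):
                    service = service[0] if service else {}
                service_id = service.get("@id")
                if service_id:
                    urls.append(f"{service_id.rstrip('/')}/full/{size}/0/default.jpg")
                elif "@id" in resource:
                    # No image service: fall back to the static image URL.
                    urls.append(resource["@id"])
    return urls


# Hypothetical two-page manifest, trimmed to the fields the function reads.
sample_manifest = {
    "sequences": [{"canvases": [
        {"label": "1r", "images": [{"resource": {
            "@id": "https://example.org/iiif/ms-1_001r/full/full/0/default.jpg",
            "service": {"@id": "https://example.org/iiif/ms-1_001r"}}}]},
        {"label": "1v", "images": [{"resource": {
            "@id": "https://example.org/images/ms-1_001v.jpg"}}]},
    ]}]
}

for url in image_urls(sample_manifest):
    print(url)
```

The printed list can be saved to a text file and handed to whatever download tool you prefer.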

Continue reading “How to download images using IIIF manifests, Part I: DownThemAll”

Manuscript PDFs: Update

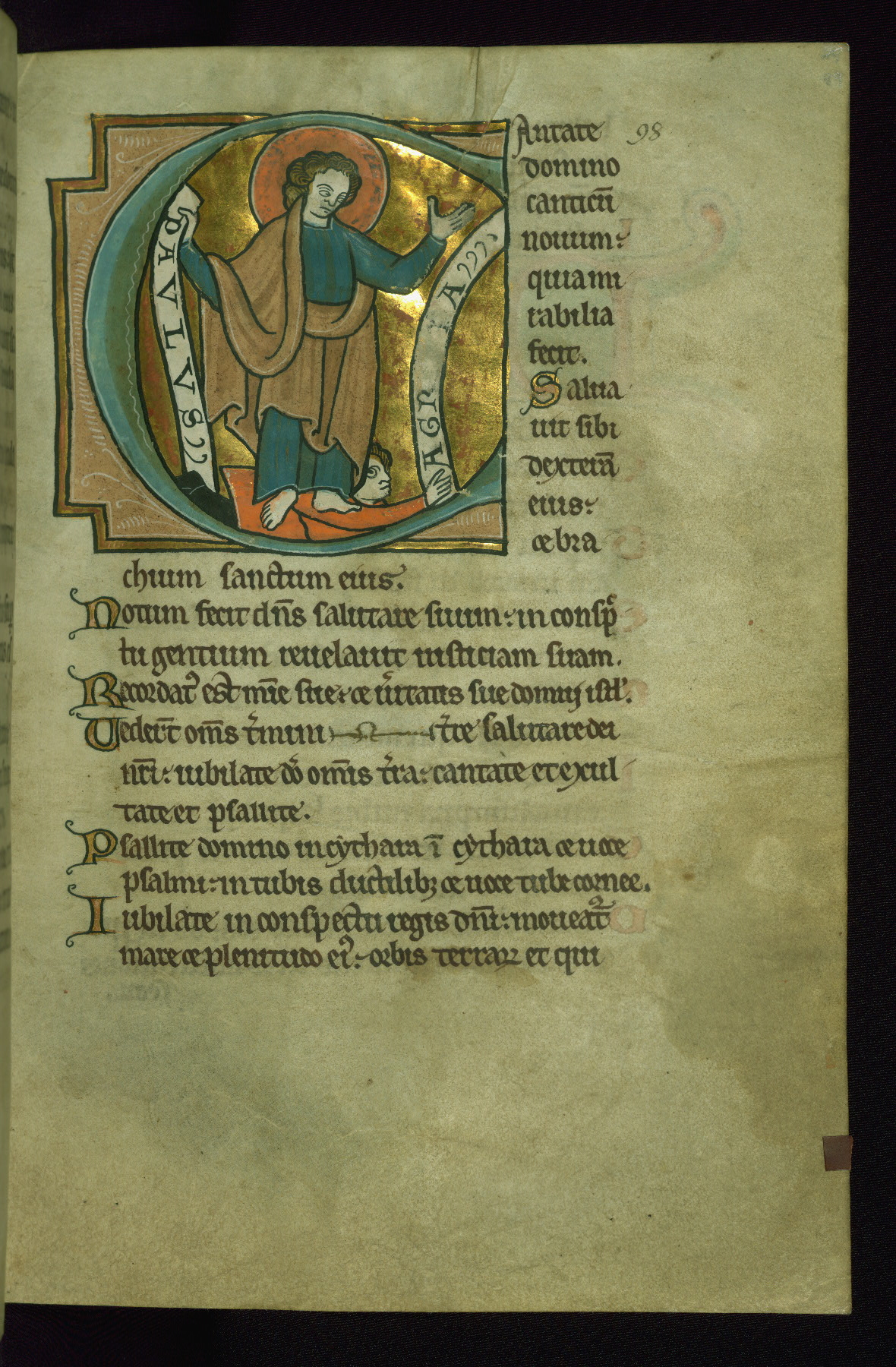

My last post was an announcement that I’d posted the University of Pennsylvania’s Schoenberg Collection manuscripts on Google Drive as PDF files, along with details on how I did it. This is a follow-up to announce that I’ve since added PDF files for UPenn’s Medieval and Renaissance Manuscript collection, AND for the Walters Art Museum manuscripts (which are available for download through The Digital Walters).

Continue reading “Manuscript PDFs: Update”

UPenn’s Schoenberg Manuscripts, now in PDF

Hi everyone! It’s been almost a year since my last blog post (in which I promised to post more frequently, haha) so I guess it’s time for another one. I actually have something pretty interesting to report!

Continue reading “UPenn’s Schoenberg Manuscripts, now in PDF”

It’s been a while since I rapped at ya

I’m not dead! I’m just really bad when it comes to blogging. I’m better at Facebook, and somewhat better at Twitter (and Twitter), and I do my best to update Tumblr.

Continue reading “It’s been a while since I rapped at ya”

Disbinding Some Manuscripts, and Rebinding Some Others (presented at ICMS, Kalamazoo, MI, May 2014)

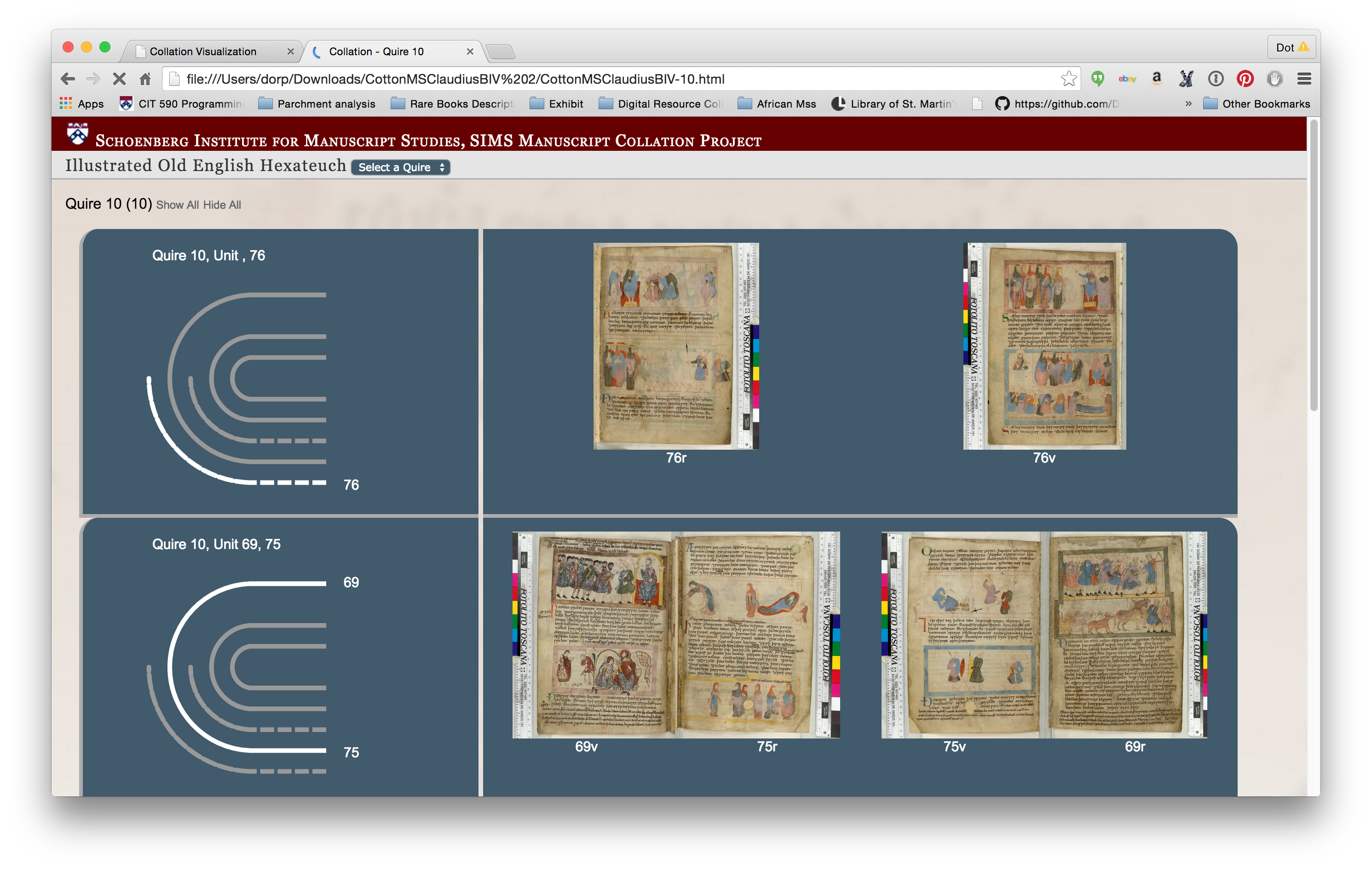

I presented my collaborative project on visualizing collation at the International Congress on Medieval Studies in Kalamazoo, Michigan, last week, and it was really well received. Also last week I discovered the Screen Recording function in QuickTime on my Mac. So, I thought it might be interesting to re-present the Kalamazoo talk in my office and record it so people who weren’t able to make the talk could still see what we are up to. I think this is longer than the original presentation – 23 minutes! – so feel free to skip around if it gets boring. Also there is no editing, so um ah um sorry about that. (Watch out for a noise at 18:16, I think my hand brushed the microphone, it’s unnerving if you’re not expecting it)

Continue reading “Disbinding Some Manuscripts, and Rebinding Some Others (presented at ICMS, Kalamazoo, MI, May 2014)”

Day of DH 2014

Today was the Day of Digital Humanities 2014, and I spent it blogging on the DayofDH main site. My day was spent in a seminar on medieval manuscripts, which as it turns out is a really fitting way to spend this day.

The individual posts:

How to get MODS using the NYPL Digital Collections API

Last week I figured out how to batch-download MODS records from the NYPL Digital Collections API (http://api.repo.nypl.org/) using my limited set of technical skills, so I thought I would share my process here.

Continue reading “How to get MODS using the NYPL Digital Collections API”

Q: How do you teach TEI in an hour?

A: You don’t! But you can provide a substantial introduction to the concept of the TEI, and explain how it functions.

On June 4 I participated in PhillyDH@Penn, a day of workshops and unconference sessions sponsored by PhillyDH and held in my own beloved Van Pelt-Dietrich Library on the University of Pennsylvania campus. I was sick, so I wasn’t able to participate fully, but I was able to lead a one-hour Introduction to TEI. I aimed it at absolute beginners, with the intention to a) give the audience an idea of what TEI is and what it’s for (to help them answer the question, Is TEI really what I need?) and b) explain enough about the TEI that they will know a bit of something walking into their first “real” (multi-hour, hands-on) TEI workshop. I got a lot of good feedback, so hopefully it did its job. And I do hope to have the opportunity to follow this up with more substantial workshops.

Slides (in PDF format) are posted here.

EDIT: Need to add that these slides owe a ton to James Cummings, with whom I have taught TEI and to whom I owe much of what I know about it!

New Job

I have a new job! As of April 1, I am the Curator, Digital Research Services, Schoenberg Institute for Manuscript Studies, Special Collections Center, University of Pennsylvania.

Now say that five times fast.

In this role, I’m responsible for the digital initiatives coming out of SIMS. I’m also a curator for the Schoenberg Manuscript collection (as are all SIMS staffers in the SCC), and I manage the Vitale Special Collections Digital Media Lab (Vitale 2). It’s also clear that I will have some broad role in the digital humanities at UPenn, although that part of the job isn’t so clear to me yet. I’m the inaugural DRS Curator, so there is still a lot to be worked out, although after three weeks on the job I’m feeling really confident that things are under control.

I report to Will Noel, Director of the SCC and Director of SIMS. Will may be best known as the Project Director for the Archimedes Palimpsest Project at the Walters Art Museum. Will is awesome, and I’m thrilled to be working for him. I’m also thrilled to be working at the University of Pennsylvania, in a beautiful brand new space, in the great city of Philadelphia.

I plan to blog more in this new position, so keep your eyes here and on the MESA blog.

Medieval Electronic Scholarly Alliance

It’s not that I haven’t been doing anything; I just haven’t been doing anything here.

In June, Tim Stinson at NCSU and I were awarded a grant from the Andrew W. Mellon Foundation to implement the Medieval Electronic Scholarly Alliance, MESA, and since we started preparing metadata for indexing in, oh, about mid-September, all of my technical energy has been going into that work. MESA is a node of the Advanced Research Consortium (ARC), and follows the model of scholarly federation spearheaded by NINES and followed by 18thconnect, using Collex as its underlying software support.

What does it involve? It involves taking metadata already created by digital collections or projects (like, for example, the Walters Art Museum, Parker on the Web, or e-Codices), mapping their fields out to the Collex and MESA fields, and then generating RDF that follows the Collex guidelines. It’s these three collections (and, just in the last week and with a ton of help from Lisa McAulay at UCLA, the St. Gall Project) that I’ve spent the most time on, and here are some key things I’ve learned so far that might be helpful for people considering adding their collections to MESA (or to any of the other ARC nodes)… or, for that matter, for anyone who is interested in sharing their data, any time ever.

- If your metadata is in an XML format, put unique identifiers on all the tags. Just do it.

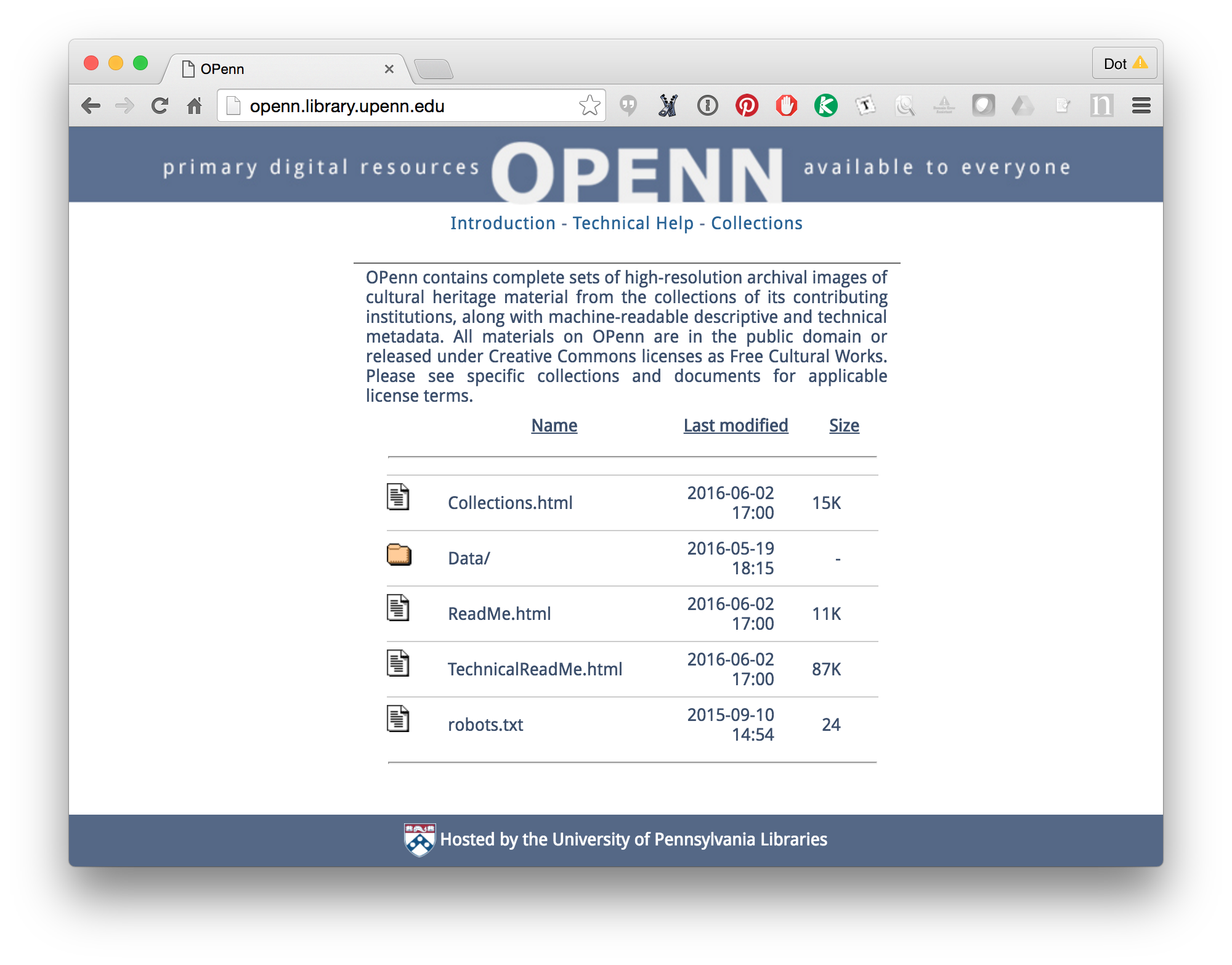

- Follow best practices for file and folder naming. In this case, best practice probably just means BE CONSISTENT. My favorite example for illustrating consistency is probably the Digital Walters at the Walters Art Museum. One of the reasons that it’s a great example is that their data (image files and metadata) has been released under Open Access licensing, with the intention that people (like me) will come along and grab them, and work with them. What this means is that everything was designed with this usage in mind, so it’s simple, and that all their practices are well-documented, so it’s easy to take them and run without having to figure a lot out. Their file and folder naming practices are documented here.

- Be thoughtful about how you format your dates. Yeah, dates are a big deal. The metadata of one of the projects (I won’t say which one) had dates formatted as, e.g., “xii early-xiii late (?)”, with no ISO (or any other even slightly computer-readable) version in, e.g., an attribute value. I ended up mapping every individual date value to a computer-readable form. As always, I figure there was a better way to do it, but at the time… well, it took a while, but in the end it did what I needed it to do. If you are in the process of setting up a new project, consider including computer-readable dates in your metadata. Because Collex/MESA includes values for both date label and date value, you can include both a computer-readable date to be used for searching and a human-readable version, which may have more nuance than can be included in a computer-readable value. For example, LABEL: xii early-xiii late (?); VALUE: 1100,1300.

- Is whatever you are describing in English? Great! If it’s not, find someplace in your metadata to indicate what language it is in. Use a controlled vocabulary, such as the ISO 639-2 Language Code List, and as with dates it helps to include a computer-readable code in addition to a human-readable version. (This is less important for MESA, as we can map between the columns in the ISO 639-2 Language Code List, but it’s a good general rule to follow)

- You can actually do a lot with a little. In the past few weeks the metadata I’ve worked with has ranged from really amazing full manuscript descriptions (in TEI P5) from the Walters Art Museum to very simple and basic descriptions in Dublin Core from e-Codices. Although the full descriptions provide more information (helpful for full-text searching), the simple DC records, assuming they include the basic information you’d want for searching (title, date, language, maybe incipits), are just fine. So don’t let a lack of detail put you off from contributing to MESA!
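To make the date point concrete, here is a small Python sketch of one way to derive a computer-readable range from a Roman-numeral century label while keeping the original label alongside it. This is an illustration, not the mapping actually used for MESA: the helper names are invented, the qualifiers ("early", "late", "(?)") are deliberately ignored so each century is widened to its full hundred years, and the output simply follows the comma-separated start,end form of the VALUE in the example above.

```python
import re

ROMAN_VALUES = {"i": 1, "v": 5, "x": 10}


def roman_to_int(numeral):
    """Convert a lowercase Roman numeral made of i, v, x (e.g. 'xiv') to an int."""
    values = [ROMAN_VALUES[ch] for ch in numeral.lower()]
    total = 0
    for i, value in enumerate(values):
        # A smaller numeral before a larger one is subtracted (iv = 4, ix = 9).
        if i + 1 < len(values) and value < values[i + 1]:
            total -= value
        else:
            total += value
    return total


def label_to_value(label):
    """Turn a label like 'xii early-xiii late (?)' into a 'start,end' year range.

    Assumption: every standalone run of i/v/x in the label is a century number.
    """
    numerals = re.findall(r"\b[ivx]+\b", label.lower())
    if not numerals:
        raise ValueError(f"no century found in {label!r}")
    start = (roman_to_int(numerals[0]) - 1) * 100  # first year of first century
    end = roman_to_int(numerals[-1]) * 100         # last century, rounded up
    return f"{start},{end}"


label = "xii early-xiii late (?)"
record = {"label": label, "value": label_to_value(label)}
print(record)
```

Keeping both fields means the searchable value can stay coarse while the label preserves the cataloguer's nuance.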

I would also encourage you to release your data under an Open Access license (something from the Creative Commons), and to make your data easy to grab (either by posting everything online, as the Walters Art Museum has done, or by releasing it through an OAI provider, as e-Codices has done).
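For anyone curious what grabbing simple Dublin Core records from an OAI provider can look like, here is a hedged Python sketch that reads the title, date, and language fields out of an OAI-PMH `ListRecords` response. The sample XML is invented for the example (it is not real e-Codices data), the function name `parse_records` is hypothetical, and a real harvest would also need to fetch the response over HTTP and follow resumption tokens, which is left out here.

```python
import xml.etree.ElementTree as ET

# Standard namespaces for OAI-PMH responses carrying oai_dc metadata.
NS = {
    "oai": "http://www.openarchives.org/OAI/2.0/",
    "oai_dc": "http://www.openarchives.org/OAI/2.0/oai_dc/",
    "dc": "http://purl.org/dc/elements/1.1/",
}

# Invented sample response with a single record.
SAMPLE = """<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/">
  <ListRecords>
    <record>
      <header><identifier>oai:example.org:ms-1</identifier></header>
      <metadata>
        <oai_dc:dc xmlns:oai_dc="http://www.openarchives.org/OAI/2.0/oai_dc/"
                   xmlns:dc="http://purl.org/dc/elements/1.1/">
          <dc:title>Psalter</dc:title>
          <dc:date>12th century</dc:date>
          <dc:language>lat</dc:language>
        </oai_dc:dc>
      </metadata>
    </record>
  </ListRecords>
</OAI-PMH>"""


def parse_records(xml_text):
    """Return one dict per record with its identifier and basic DC fields."""
    root = ET.fromstring(xml_text)
    records = []
    for record in root.iterfind(".//oai:record", NS):
        fields = {
            "identifier": record.findtext(
                "oai:header/oai:identifier", default="", namespaces=NS
            )
        }
        # DC elements are repeatable, so each field is kept as a list.
        for name in ("title", "date", "language"):
            fields[name] = [el.text for el in record.iterfind(f".//dc:{name}", NS)]
        records.append(fields)
    return records


for rec in parse_records(SAMPLE):
    print(rec)
```

Even records this sparse carry the title, date, and language that searching needs.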