You are in the right place! I just changed my theme. I upgraded to the most recent version of the theme I’d been using, and I didn’t like some of the changes, so I decided to do something completely different. This one is a bit more sophisticated. I like it!

Almost ready to update

Doug and I have been working on the generalized BookReader javascript file, and I am almost ready to update all the Walters manuscripts. There is just one bug that is causing all the 1-up images to display teeny-tiny, instead of fitting to the height of the browser window. But once that is taken care of I’ll generate the new javascript files (I have an XSLT ready to go now) and get those up right quick.

The javascript has been updated to pull everything it needs to function from the TEI manuscript description file, except for the name of the msDesc file itself. That is the only bit that needs to be hard-coded into the javascript – everything else gets grabbed from that file. This includes:

- The manuscript’s id number (tei:idno) and siglum (which is the idno with the “.” removed – W.4 becomes W4). Both of these are needed at several different points in the javascript. In an earlier post I was pleased with myself for figuring out how to grab the siglum from the name of the TEI file, which takes a few steps and is a bit complicated. I’m glad that I figured out how to do that, but using the idno for this purpose is much more elegant.

- The number of leaves in the manuscript. This is required by the BookReader. In the context of the Walters collection, it doesn’t make sense to just grab the number of <surface> elements in <facsimile> (which is what I did the first time), because there are images of the fore-edge, tail, spine, head, and in some cases where the bindings have flaps, an image of the flap closed. We removed those images from the group (although important, they would not make sense within the context of a page-turning version of the manuscript) and then counted the number of images remaining.

- The title of the manuscript: title[@type=’common’]. The Walters manuscripts have several different titles, but for the purpose of the BookReader – the title to display at the top of the main window – the common title made the most sense.

- The height and width of the page images. The first version of the BookReader hard-coded these numbers in, so the best I could do was to use measurements from an example page and hope that the other images in the manuscript weren’t too much different. However, I know that the images are frequently different sizes. So being able to pull the measurements for each individual page image is very useful.

- Finally, we were able to use the information about the language of the manuscript (textLang/@mainLang) to determine whether a manuscript should display right-to-left or left-to-right. Doug figured out how to modify the javascript to allow right-to-left page-turning, and I figured out how to grab that information from the TEI file. We were both pretty tickled about this one! Given that many, if not most, of the Digital Walters manuscripts are non-Western, having a right-to-left display is really important functionality.
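As a rough illustration of the derived values in the list above, here is a minimal javascript sketch. It assumes the idno, the mainLang value, and the per-page measurements have already been read out of the msDesc file. The function names, the list of right-to-left language codes, the 'rl'/'lr' return strings, and the shape of the `pages` array are all assumptions made for the example, not the code actually running the Walters BookReaders.

```javascript
// Sketch only: names and data shapes here are assumptions for illustration.

// Siglum = idno with the "." removed (W.4 becomes W4).
function siglumFromIdno(idno) {
  return idno.split('.').join('');
}

// Assumed list of right-to-left language codes; extend as needed.
const RTL_LANGS = ['ar', 'fa', 'he', 'ota', 'syr'];

// Decide page-turning direction from the textLang/@mainLang value.
// Any region or script subtag ("ar-EG") is ignored.
function pageDirection(mainLang) {
  const base = mainLang.toLowerCase().split('-')[0];
  return RTL_LANGS.includes(base) ? 'rl' : 'lr';
}

// Per-page measurements instead of one hard-coded width/height pair.
function makeSizeLookup(pages) {
  return {
    width: (index) => pages[index].width,
    height: (index) => pages[index].height,
    count: pages.length,
  };
}

// Example with made-up measurements for two pages.
const sizes = makeSizeLookup([
  { width: 1200, height: 1800 },
  { width: 1180, height: 1790 },
]);
console.log(siglumFromIdno('W.4'), pageDirection('ar'), sizes.width(1));
```

Keeping each derived value in its own small function means only the name of the msDesc file has to differ from one manuscript to the next.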

So, updates to the manuscripts are coming very soon. Once they are up I will (finally) publish a post that explains the workflow in more detail and includes all the files and documentation one would need to create their own Digital Walters BookReaders, or to do the same kind of thing with their own materials.

New manuscript descriptions, and javascript

For the past couple of days I’ve been working with Doug Emery at Digital Walters on a few things that will greatly improve our little project!

1) Updating the TEI manuscript descriptions to include the height & width for each image file. If you’ve read previous posts on this blog, you know that the BookReader javascript file requires a height & width for the images in order to work correctly. My workflow has been, for each manuscript, to open a representative image in Jeffrey’s Exif viewer (http://regex.info/exif.cgi), take the measurements from that image, and hard-code them into the javascript file. This is time- and effort-intensive, and it makes my head hurt. Having the measurements easily available (like, encoded into the TEI files) means that we now have the option of pulling those measurements out and applying them programmatically. Which leads us to the next cool thing we’ve been doing…

2) Improving the BookReader simple javascript file with a more thoughtful use of code to pull as much as we can out of the metadata rather than hard-coding it. Ideally the same file could be used for each manuscript, but I don’t know if we’ll get to that point.[1] The main problem seems to be with the siglum – it’s not actually coded anywhere in the metadata. When I generated the javascript files that are running the manuscripts now I used XSLT to extract the siglum from the name of the TEI file, but I am having trouble doing something similar using javascript. Although, as I think about it, there’s nothing stopping me from just adding that to the TEI files myself. We’ve already added the measurements, so why not add this too? It would be a simple script and would take a few minutes, and it might just make the javascript file completely free from hard-coded information. Perhaps I’ll try that tomorrow!

Although I started out intending to do everything myself, I’m really glad to have picked up a partner to help me with the javascript (someone who also happens to be knowledgeable about the metadata and able to feed me additional metadata when needed!). I have learned a lot in the past week, and this is the first time I’ve done javascript and really understood how it’s working, and I feel confident applying it on my own. It’s pretty exciting.

[1] If you’ve read previous posts, you know that I do want to have self-contained sets of files for each ms, rather than running everything off of one javascript file controlled by a drop-down list of sigla or something like that. I think that it would be possible to do that, but it’s not really what I want. When I say “the same file used for each manuscript” I mean that identical files could be found running each manuscript. I hope this distinction makes sense.

More Walters Manuscripts online

I uploaded 71 more manuscripts this afternoon: see Walters Art Museum Manuscripts.

I spent some time on Skype with Doug Emery this afternoon. He figured out how to use jquery to pull in the TEI manuscript description (an XML file) and use that to get the correct order of the images! He showed me how he did it, so I should be able to figure out something similar in the future if I need to. He also showed me generally how to use javascript to reach into an XML file and grab an element value, a great trick and more versatile than XSLT. We had an interesting conversation about XML-brain (which is what I have) vs. programmer brain (which is what he has). I tend to use XSLT to do everything, since that’s what I know, and he will only use XSLT under duress (I’m paraphrasing and may be exaggerating). But it’s clear that javascript/jquery adds a lot to a toolkit, even if there is still much I can do with XSLT.

What I’d like eventually is a simple javascript file that can replace the current javascript files I’ve generated for each manuscript, one that can pull all of the information it needs straight from the metadata files rather than having it hard-coded into the javascript (which is how it is working now).

There are three things currently hard-coded that will need to be grabbed:

- The URL for the image files

- The home URL for the book (where the data resides on the WAM server)

- height and width for the image files

The third I figured would be the toughest, because that information doesn’t currently exist anywhere other than in the image files themselves. Doug and I have a plan (his plan 🙂 ), so hopefully we’ll be able to get that in this week.

In the meantime, until we can get the javascript updated significantly, I’m going to go ahead and finish checking and uploading the existing manuscripts. Once the updated javascript is ready I’ll switch everything over to the new system.

This is fun!

Two steps forward and a big step back

First, the good news: I generated BookReaders for all the Walters manuscripts, and I’ve checked and uploaded some of them to this site (look for “Walters Art Museum Manuscripts” in the right menu, or go here: http://www.dotporterdigital.org/?page_id=20). Yesterday (after I figured out that there was a relative path that was slightly wrong, keeping anything from working) there was only one thing left to do: finding the height and width, in pixels, of all the page images. I was hoping to do something programmatic, but instead I have been using Jeffrey’s Exif Viewer (http://regex.info/exif.cgi) to find those numbers, and putting them into the simple js files by hand. It’s probably not ideal, and it takes a while, but 1) it’s simple (I like simple!) and 2) it offers another opportunity for quality control. I’ve found a few instances where the title pulled out of the XML contains a single quote, which messes up the javascript and keeps the book from displaying, so it’s a good check.
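One way to sidestep the single-quote problem, sketched here in javascript, is to let `JSON.stringify` write the string literal rather than wrapping the title in quotes by hand. The helper name and the sample title are made up for the example, and `br.bookTitle` is assumed to be the property the simple js file sets; check the name against the actual file.

```javascript
// Sketch only: `br.bookTitle` is assumed to be the property set in the
// simple js file; the helper name and sample title are made up.
function titleAssignment(title) {
  // JSON.stringify emits a double-quoted literal with quotes and
  // backslashes escaped, so an apostrophe in the title is harmless.
  return 'br.bookTitle = ' + JSON.stringify(title) + ';';
}

console.log(titleAssignment("St. Francis' Missal"));
```

The same idea carries over to an XSLT-generated file: escape the title at generation time instead of trusting that it contains no quotes.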

Now for the not-so-good news: While going through this exif-checking / quality control, I found an instance where the image files aren’t named in order. That is, page 1 is image 0001, page 2 is image 0007, page 3 is image 0003 … etc. In this instance there is no image 0002, so “page 2” shows as a blank, and “page 7” displays what is actually page 2. I wrote to Will Noel, Mike Toth, and Doug Emery (Digital Walters’ brain trust) and it turns out that this is a fairly frequent occurrence. While the IA BookReader assumes that files are numbered consecutively (since that is how they design their projects), Walters provides ordering in the form of a TEI facsimile element in each manuscript description.

Long story short, I spent some time on Skype with Doug (very generously giving me his time on a Saturday afternoon!) and he recommended using jquery to build a web app that will build a list of fake file names, using the order from the facsimile element, and will associate each of those names with the file that matches the surface that the fake file name represents. I think that this will be more elegant than trying to modify the way that the BookReader works.
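The fake-file-name idea can be sketched in plain javascript, leaving out the jquery step that would read the facsimile element. This is not Doug's implementation, only an illustration of the mapping. It assumes the graphic URLs have already been collected into an array in facsimile order, and the file names and function names are invented for the example.

```javascript
// Sketch only: the file names below are invented for illustration.

// Zero-pad a page number to four digits: 7 -> "0007".
function pad4(n) {
  return String(n).padStart(4, '0');
}

// urlsInOrder: graphic URLs in the order the facsimile element lists them.
// Returns a Map from a consecutive "fake" name to the real file.
function buildFakeNameMap(siglum, urlsInOrder) {
  const map = new Map();
  urlsInOrder.forEach((url, i) => {
    map.set(siglum + '_' + pad4(i + 1) + '.jpg', url);
  });
  return map;
}

// Page 2 is really image 0007, as in the manuscript described above.
const lookup = buildFakeNameMap('W4', [
  'real_0001.jpg',
  'real_0007.jpg',
  'real_0003.jpg',
]);
console.log(lookup.get('W4_0002.jpg'));
```

The BookReader can then keep asking for consecutively numbered pages while the lookup hands back whichever file the facsimile order says belongs there.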

Now, I don’t know jquery! Doug pointed me to a tutorial, and I also have access to Lynda.com through my employer, so I expect I’ll spend some time this week getting up to speed on jquery. Doug is going to work on this in the meantime too.

I’ve placed a warning at the top of the Walters page, to let folks know that there may indeed be wonkiness in the BookReaders, but we are on the case! I’m going to go ahead and check and upload all the rest of the manuscripts, and hope that the jquery fix can be made programmatically across all the simple js files. Depending on how that goes I may go ahead with the planned Omeka catalog (the issue with page ordering in the BookReader shouldn’t really have an impact on that work) or I may wait. Stay tuned!

Progress!

I got in a good few hours of work this evening, and I really have some things to show for it.

I’m almost done with the stylesheet to generate the javascript BookReader files. Not having used XSLT to generate a javascript file before I wasn’t sure it would work, but it sure did. I had to set a bunch of variables, one after the other, to do things like extract the ms siglum from the file name and extract the number of pages from the last graphic tag in the facsimile section. That last one I’m particularly pleased with:

    <!-- extract the number of page images from the last graphic in the facsimile section -->
    <xsl:variable name="url">
      <xsl:for-each select="tei:TEI/tei:facsimile/tei:surface/tei:graphic">
        <xsl:if test="position()=last()">
          <xsl:value-of select="@url"/>
        </xsl:if>
      </xsl:for-each>
    </xsl:variable>
    <xsl:variable name="replace-thumb" select="replace($url,'thumb','')"/>
    <xsl:variable name="replace-siglum" select="replace($replace-thumb,$siglum,'')"/>
    <xsl:variable name="replace-underscore" select="replace($replace-siglum,'_','')"/>
    <xsl:variable name="replace-jpg" select="replace($replace-underscore,'.jpg','')"/>
    <xsl:variable name="replace-slash" select="replace($replace-jpg,'/','')"/>
    <xsl:variable name="page-nos" select="number($replace-slash)"/>

I expect there is a more sophisticated way to do this, but I’m just tickled that I figured it out myself.
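For comparison, the same chain of replacements can be sketched in javascript. The example URL is only a guess at the shape of a graphic/@url value (it is not taken from the real data), and the function names are hypothetical. Unlike XPath's replace(), which treats its pattern as a regular expression, this version removes each piece as literal text.

```javascript
// Sketch only: mirrors the replace() chain in the stylesheet.
// The example URL shape is an assumption, not taken from the real data.

// Literal (non-regex) removal of every occurrence of `piece`.
function removeAll(text, piece) {
  return text.split(piece).join('');
}

// Strip everything that is not the image number, then convert to a number.
function pageCountFromUrl(url, siglum) {
  let rest = url;
  for (const piece of ['thumb', siglum, '_', '.jpg', '/']) {
    rest = removeAll(rest, piece);
  }
  return Number(rest);
}

console.log(pageCountFromUrl('thumb/W4_000123.jpg', 'W4')); // 123
```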

I made good use of those variables, too; in fact, every replacement I made in the javascript was done with a variable. I don’t think I’ve ever made a stylesheet constructed entirely of variables before.

The stylesheet generates a folder, named after the siglum, and the (much simpler) stylesheet I wrote for the html file does the same, and also uses the same variables (although not as many of them).

There is still work to do. For one thing, it’s not working! It’s something in the javascript, and since javascript isn’t my forte it’s going to take me a while to figure it out. I’ll probably spend some time this weekend modifying my test BookReader (the one that works) by hand, in comparison with the XSLT output, and see if I can get it to break or if I can see what’s different in the output that would cause it to break.

In any case I feel really good about what I’ve been able to get through tonight, and now I am READY FOR BED.

More thoughts on the plan

Yesterday I set up my hosting and installed some tools that I’m going to use to start my project. I also explained a bit about the project, just my initial thoughts.

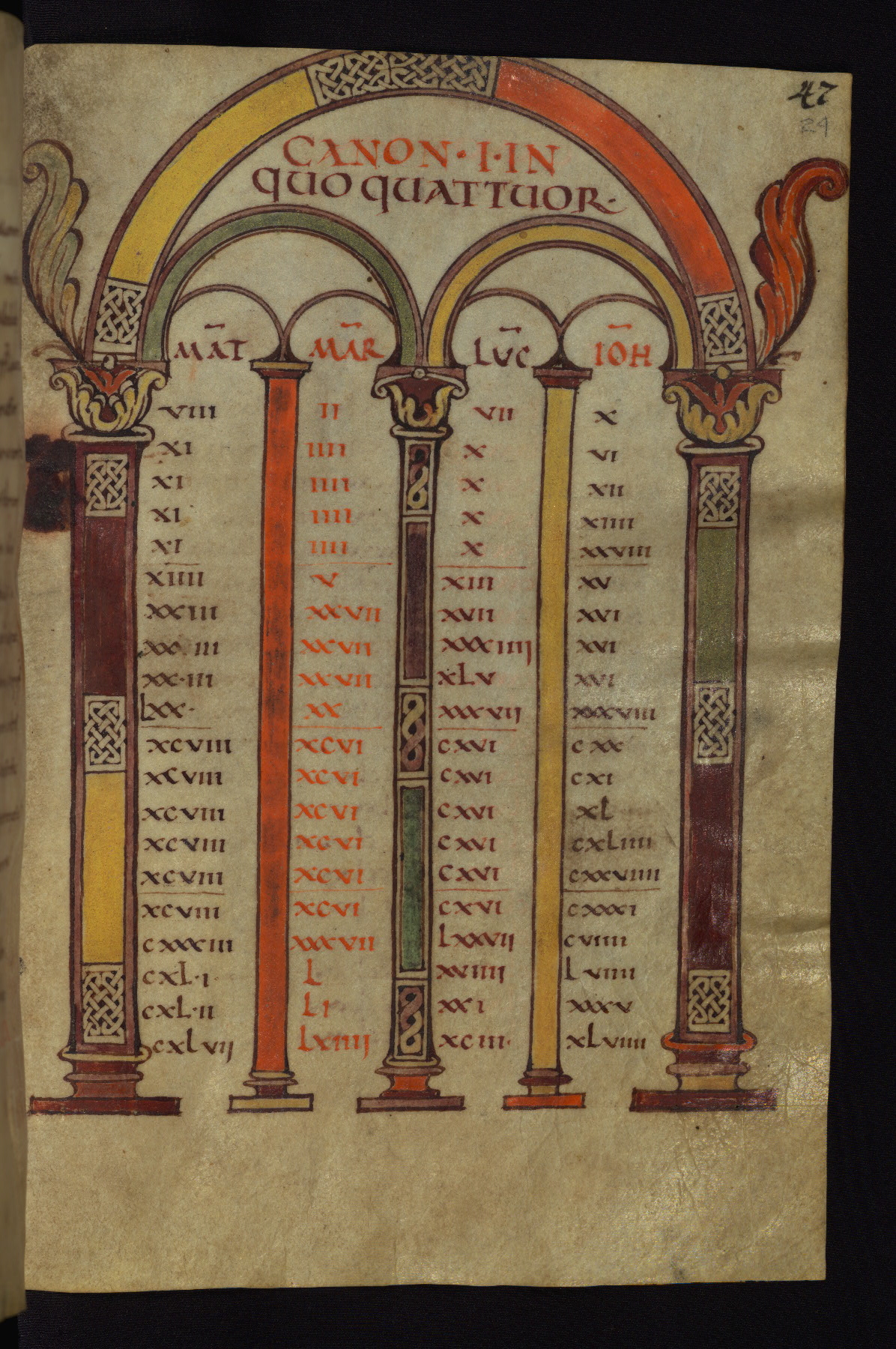

Today I did a bit of looking at code, and thinking in more detail about what I want to get out of the first stage of the project: creating Internet Archive BookReader objects for every manuscript digitized by the Walters Art Museum (http://www.thedigitalwalters.org/).

I’ve selected the IA BookReader (http://openlibrary.org/dev/docs/bookreader) for this project because it’s a very simple bit of code and it presents an attractive and functional finished product. I’m using the Walters Art Museum data because it’s been released under a Creative Commons license and because the Curator of Manuscripts, Will Noel, has been vocal about inviting people to take and reuse the data – so that is what I’m going to do.

Yesterday I selected one of the Walters manuscripts and created a BookReader for it – you can access it by clicking the image on this page. It’s certainly not perfect: notably, there is no way to navigate using page numbers, and I think foliation would be more problematic than pagination to figure out. Anyway, that’s something that I may tackle later on. But for now I’m pretty happy with it.

What do I want the finished product to look like? I can think of a few different ways I could work this, but the file structure I’ve decided on is to have one folder for each manuscript, named according to the manuscript’s siglum, containing all the files that will be required to drive the app. I have also considered keeping only the manuscript-specific files in those folders (the index.html file and the BookReader Simple javascript file), storing the general files elsewhere on the file system, and pointing to them from the html and simple javascript files using URLs (so someone could download the folder for one manuscript and still run it). However, that would mean that in order to run the page turner, the user’s machine would need to be online. So I think it makes more sense to package everything together. Although it’s not the most efficient approach, it has the advantage of making each manuscript completely accessible and usable outside of the context of the collection.

What do I need to do to get from here to there?

THERE. What is there? There I see an index.html file, and in order to specify that html file for this manuscript, I will need to put in the manuscript’s name in two places. Over there I see a javascript file. It needs quite a bit more information specified for this manuscript:

- width of the page image in pixels

- height of the page image in pixels

- ms siglum – in a couple of different places

- number of page images

- manuscript name

HERE. What do I have here? I have a TEI manuscript description! It is a beautiful thing. The TEI ms desc gives me much of the information I need over there.

- the siglum, bless its heart, isn’t noted on its own anywhere in the XML, although it is part of all the graphic/@url values that are in the facsimile section. Happily each of the ms desc files is named according to the siglum, so I should be able to extract it from the file name.

- the name of the manuscript can be extracted from TEI/teiHeader/fileDesc/titleStmt/title[@type=’common’]. Many of the manuscripts have additional titles, but for this exercise I’ll just be taking the common one.

- the number of pages is a bit more difficult. I think the easiest way to do this will be to extract the number from the last TEI/facsimile/surface/graphic/@url in the document, where the number is part of that URL value.

- width and height of the page image in pixels – I have no idea. The BookReader really depends on these numbers being there: if you leave them out, the reader will load the image at actual size, which looks awful (and breaks the tile view), and if you don’t get the width-to-height ratio correct, it will skew the images. So they really need to be exact. Of course, the same measurement is applied to every page in the manuscript, and that only works as long as you don’t have fold-outs or anything like that (more common in printed books, but not completely unheard of in medieval manuscripts) – so for some documents the BookReader just won’t be usable. ANYWAY, I think these numbers will need to be extracted from the image files. I haven’t done that before, but I know it can be done. I just need to figure out how to do it.
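For the last item, one possible approach is to read the pixel dimensions straight out of each JPEG's header. This is a minimal sketch for Node, assuming the page images are ordinary JPEG files. The function name is hypothetical and the parser is deliberately simple: it walks the marker segments until it reaches a start-of-frame segment and does not handle every unusual file.

```javascript
// Sketch only: a deliberately small JPEG header reader, not a full parser.
// `buf` is a Node Buffer holding the bytes of one image file.
function jpegSize(buf) {
  if (buf.length < 4 || buf[0] !== 0xff || buf[1] !== 0xd8) {
    throw new Error('not a JPEG file');
  }
  let pos = 2; // skip the start-of-image marker
  while (pos + 9 <= buf.length) {
    if (buf[pos] !== 0xff) throw new Error('marker expected at byte ' + pos);
    const marker = buf[pos + 1];
    if (marker === 0xff) { // fill byte before a marker
      pos += 1;
      continue;
    }
    // Start-of-frame markers are C0..CF, except C4, C8, and CC.
    const isFrame = marker >= 0xc0 && marker <= 0xcf &&
      marker !== 0xc4 && marker !== 0xc8 && marker !== 0xcc;
    if (isFrame) {
      // Layout after the marker: length (2), precision (1), height (2), width (2).
      return {
        height: buf.readUInt16BE(pos + 5),
        width: buf.readUInt16BE(pos + 7),
      };
    }
    // Skip this segment: 2 marker bytes plus its stated length.
    pos += 2 + buf.readUInt16BE(pos + 2);
  }
  throw new Error('no start-of-frame segment found');
}
```

In Node the buffer could come from `fs.readFileSync` on a downloaded page image. Looping over every image, rather than one representative page, would also deal with manuscripts whose pages are not all the same size.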

So, this is my plan. Tomorrow and over the weekend I will start playing around with code to see what I can get easily and what will need more investigation. For now, I sleep.

The plan. I have a plan?

I spent this evening setting up the hosting (via Dreamhost), installing Omeka, MediaWiki, and WordPress, moving the few page-turning books I set up yesterday (using the Internet Archive BookReader) over to the sandbox, installing a bunch of Omeka plugins, and thinking about what exactly it is I want to do first.

Someone very smart told me just the other day that the best way to learn new programming skills is to have a project in mind, and to work through that project. So I think that’s what I’m going to do, and I have an idea of what I want my first project to be. It’s a doozie 🙂

The Walters Art Museum, with great foresight (mainly the foresight of Will Noel, Curator of Manuscripts) has released all of their digitized manuscripts, and many TEI manuscript descriptions along with them, under a Creative Commons license. The metadata is regular and standard, and the naming conventions for the scanned images are regular as well. So if one wishes to do something with the digitized collections, one could write up some scripts to grab the information one required (metadata and/or image files) and do it all in one go. Theoretically. And that is what I am going to do.

At this point my plan has two stages.

The first stage is to create Internet Archive BookReader versions for all of the scanned manuscripts from the Walters collection. The BookReader is super simple and there are only a few changes that would need to be made to the code for each version: Changing the book title (manuscript name) in a few places in the index.html file, and changing the URL pattern, manuscript name, and “home URL” (which would point to the Walters website) in the BookReader.js file. Honestly, I’m not entirely sure how I am going to do this, but I know it can be done. So I’ll figure it out.

The second stage is to create records for all these BookReader versions in Omeka. Now, the manuscript descriptions for the Walters manuscripts are extensive and good, but there is no place for descriptive metadata in the BookReader itself. On the other hand, Omeka doesn’t do well with multi-page documents. There’s no page-turning interface (I didn’t even see a plug-in for one); each individual page is presented separately, which isn’t really a good way to interact with codex manuscripts. My plan is to create a CSV file of metadata extracted from the Walters manuscript descriptions, import that into Omeka, and then provide links from those records to the BookReader versions, where the manuscripts can be interacted with a bit more naturally. (I’m actually following the model that we used at Indiana University Bloomington in developing the War of 1812 in the Collections of the Lilly Library project, which also uses Omeka to describe books and other objects but doesn’t display them through Omeka; instead it links out to our purpose-built publication services: http://collections.libraries.iub.edu/warof1812/) Now, how exactly am I going to create that CSV file? Again, I’m not sure yet, but I know that I can do it.
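Whatever ends up extracting the metadata, the CSV-writing step itself is small. Here is a minimal javascript sketch, assuming the metadata has already been pulled into plain objects. The column names and the sample record are invented for illustration and are not the fields the Omeka import will actually use.

```javascript
// Sketch only: column names and the sample record are invented.

// Quote a field only when it contains a comma, a double quote, or a line
// break; embedded double quotes are doubled (the usual CSV convention).
function csvField(value) {
  const s = String(value);
  return /[",\r\n]/.test(s) ? '"' + s.replace(/"/g, '""') + '"' : s;
}

function csvRow(fields) {
  return fields.map(csvField).join(',');
}

// columns: header names; records: objects keyed by those names.
function toCsv(columns, records) {
  const lines = [csvRow(columns)];
  for (const rec of records) {
    lines.push(csvRow(columns.map((c) => (rec[c] === undefined ? '' : rec[c]))));
  }
  return lines.join('\r\n') + '\r\n';
}

console.log(toCsv(
  ['siglum', 'title', 'bookreader_url'],
  [{ siglum: 'W4', title: 'Gospels, with "canon tables"', bookreader_url: 'W4/index.html' }]
));
```

Handling the quoting in one place matters here for the same reason it does in the javascript files: manuscript titles regularly contain commas and quotation marks.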

That’s it for tonight. Tomorrow I’m going to try to figure out what I’m going to have to do to start on stage one. Right now I’m thinking some kind of script to grab all the manuscript names + sigla (it’s the sigla that are different in the page URL from manuscript to manuscript), put them in some kind of (probably XML) document, then a separate script (probably XSLT, since that’s what I know best) to generate the BookReader files for each title. Any suggestions?