Following are my remarks from the Collections as Data National Forum 2 event held at the University of Nevada, Las Vegas, on May 7, 2018. Collections as Data is an effort supported by the Institute of Museum and Library Services that aims to foster a strategic approach to developing, describing, providing access to, and encouraging reuse of collections that support computationally driven research and teaching in areas including but not limited to Digital Humanities, Public History, Digital History, Data-Driven Journalism, Digital Social Science, and Digital Art History. The event was organized by Thomas Padilla, and I thank him for inviting me. It was a great event and I was honored to participate.

Continue reading “Data for Curators: OPenn and Bibliotheca Philadelphiensis as Use Cases”

Ceci n’est pas un manuscrit: Summary of Mellon Seminar, February 19th 2018

This post is a summary of a Mellon Seminar I presented at the Price Lab for Digital Humanities at the University of Pennsylvania on February 19th, 2018. I will be presenting an expanded version of this talk at the Rare Book School in Philadelphia, PA, on June 12th, 2018.

Continue reading “Ceci n’est pas un manuscrit: Summary of Mellon Seminar, February 19th 2018”

Slides from OPenn Demo at the American Historical Association Meeting

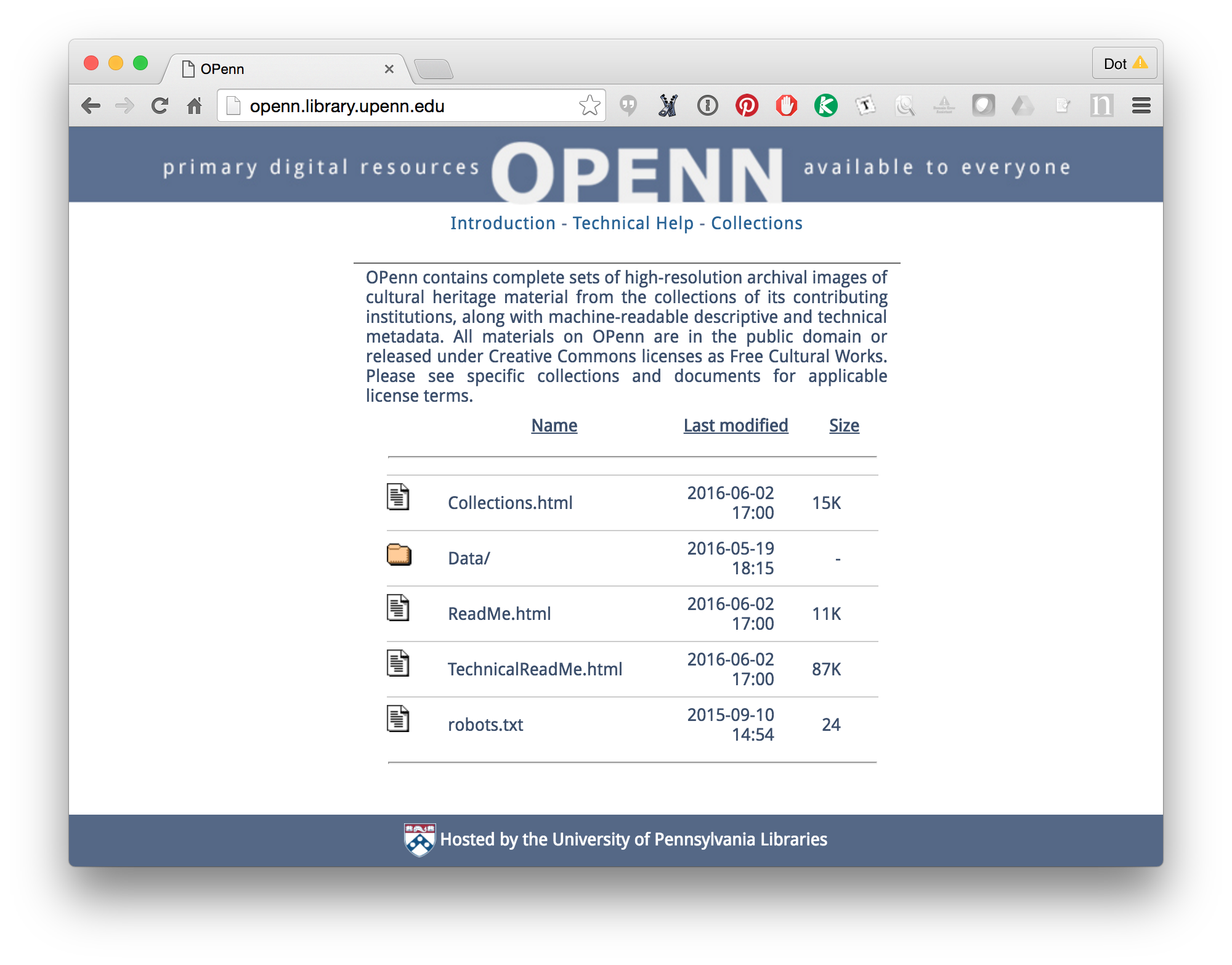

This week I participated in a workshop organized by the Collections as Data project at the annual meeting of the American Historical Association in Washington, DC. The session was organized by Stewart Varner and Laurie Allen, who also introduced it, and the other participants were Clifford Anderson and Alex Galarza.

Continue reading “Slides from OPenn Demo at the American Historical Association Meeting”

The Historiography of Medieval Manuscripts in England (and the USA)

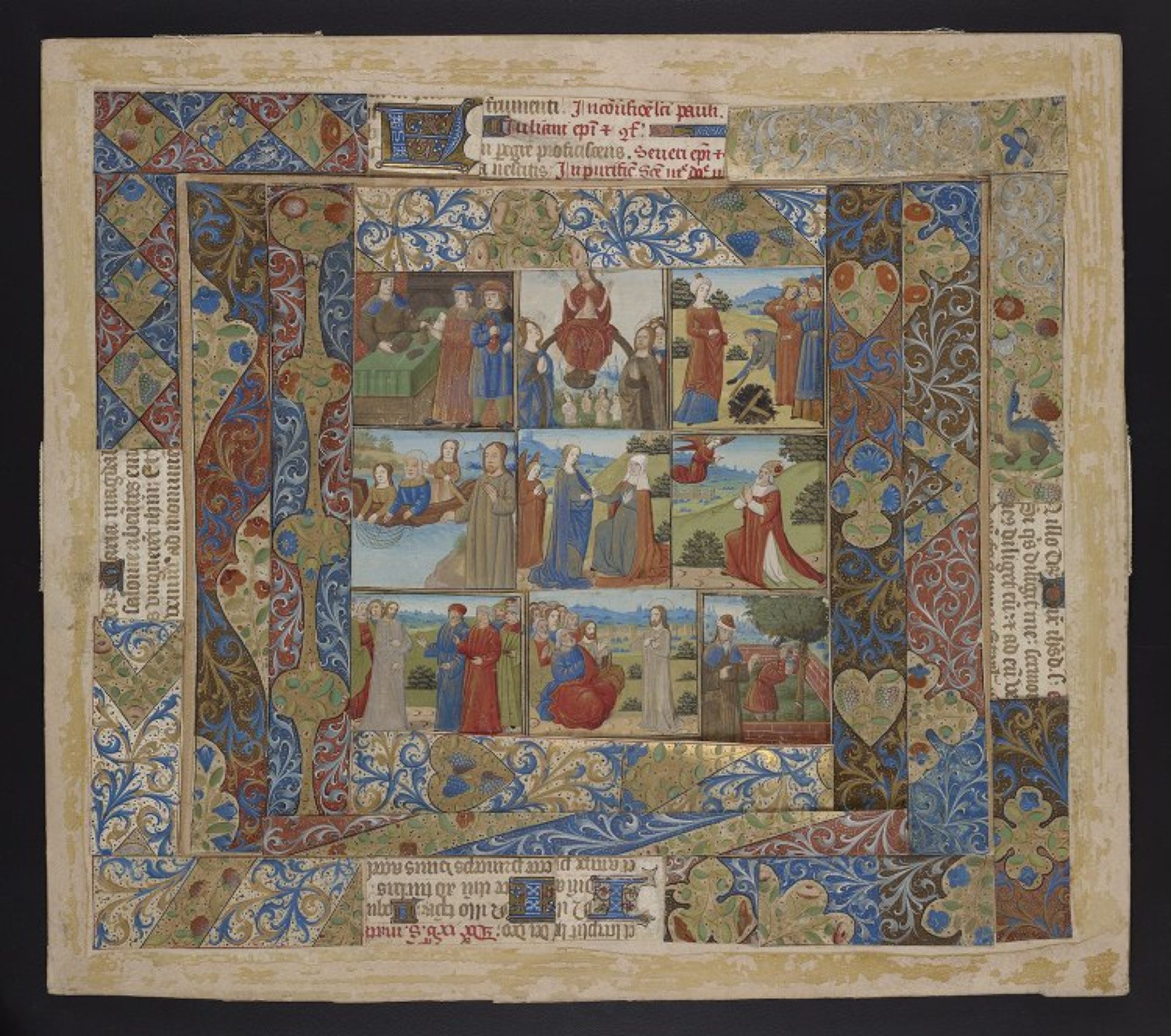

The text of a lightning talk originally presented at The Futures of Medieval Historiography, a conference at the University of Pennsylvania organized by Jackie Burek and Emily Steiner. Keep in mind that this was very lightly researched; please be kind.

Continue reading “The Historiography of Medieval Manuscripts in England (and the USA)”

“Freely available online”: What I really want to know about your new digital manuscript collection

So you’ve just digitized medieval manuscripts from your collection and you’re putting them online. Congratulations! That’s great.

Continue reading ““Freely available online”: What I really want to know about your new digital manuscript collection”

“What is an edition anyway?” My Keynote for the Digital Scholarly Editions as Interfaces conference, University of Graz

This week I presented this talk as the opening keynote for the Digital Scholarly Editions as Interfaces conference at the University of Graz. The conference is hosted by the Centre for Information Modelling at the University of Graz; the programme chair is Georg Vogeler, Professor of Digital Humanities, and the programme is endorsed by DiXiT – Scholarly Editions Initial Training Network. Thanks so much to Georg for inviting me! And thanks to the audience for the discussion afterward. I can’t wait for the rest of the conference.

Continue reading ““What is an edition anyway?” My Keynote for the Digital Scholarly Editions as Interfaces conference, University of Graz”

UPenn’s Schoenberg Manuscripts, now in PDF

Hi everyone! It’s been almost a year since my last blog post (in which I promised to post more frequently, haha) so I guess it’s time for another one. I actually have something pretty interesting to report!

Continue reading “UPenn’s Schoenberg Manuscripts, now in PDF”