This is a version of a paper I presented at the University of Kansas Digital Humanities Seminar, co-sponsored with the Hall Center for the Humanities, on September 17, 2018.

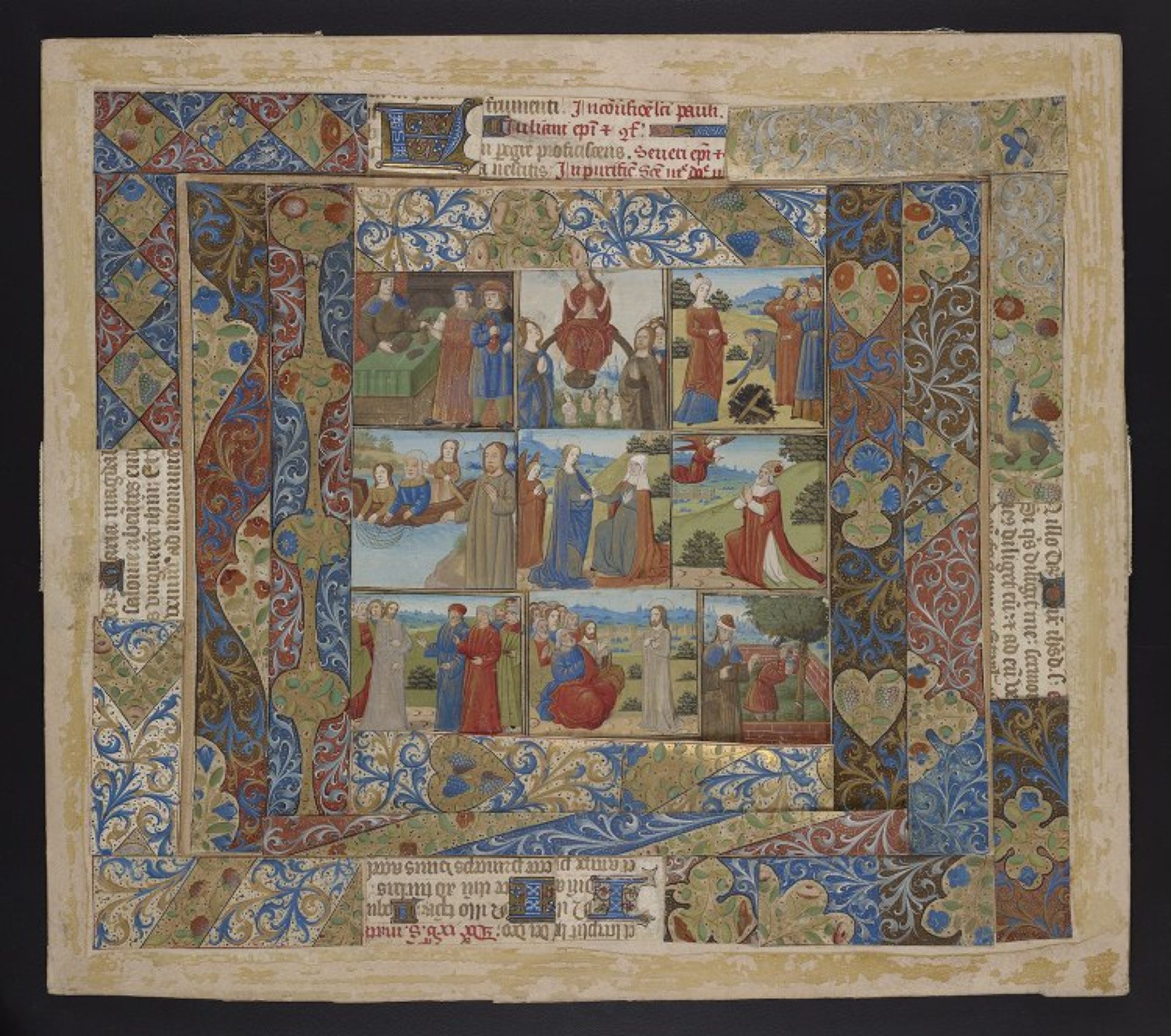

Continue reading “The Uncanny Valley and the Ghost in the Machine: a discussion of analogies for thinking about digitized medieval manuscripts”
