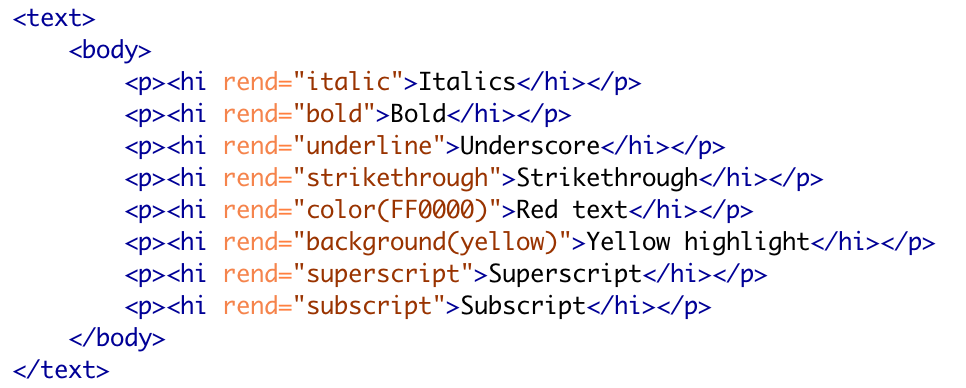

For the past couple of years I’ve been refining a workflow to convert MS Word files into publishable TEI. By “publishable” I mean TEI that can be loaded into some existing publication system (something like TEI Publisher, Edition Visualization Technology (EVT), or TEI Boilerplate), or that you could process yourself in some other way.

Using VisColl to Visualize Parker on the Web: Reports on an experiment

This is the full text of a talk I presented at the Parker on the Web 2.0 Symposium in Cambridge on March 16, 2018. (Please note the addendum at the end, which addresses an issue that came up in discussion later in the day.)

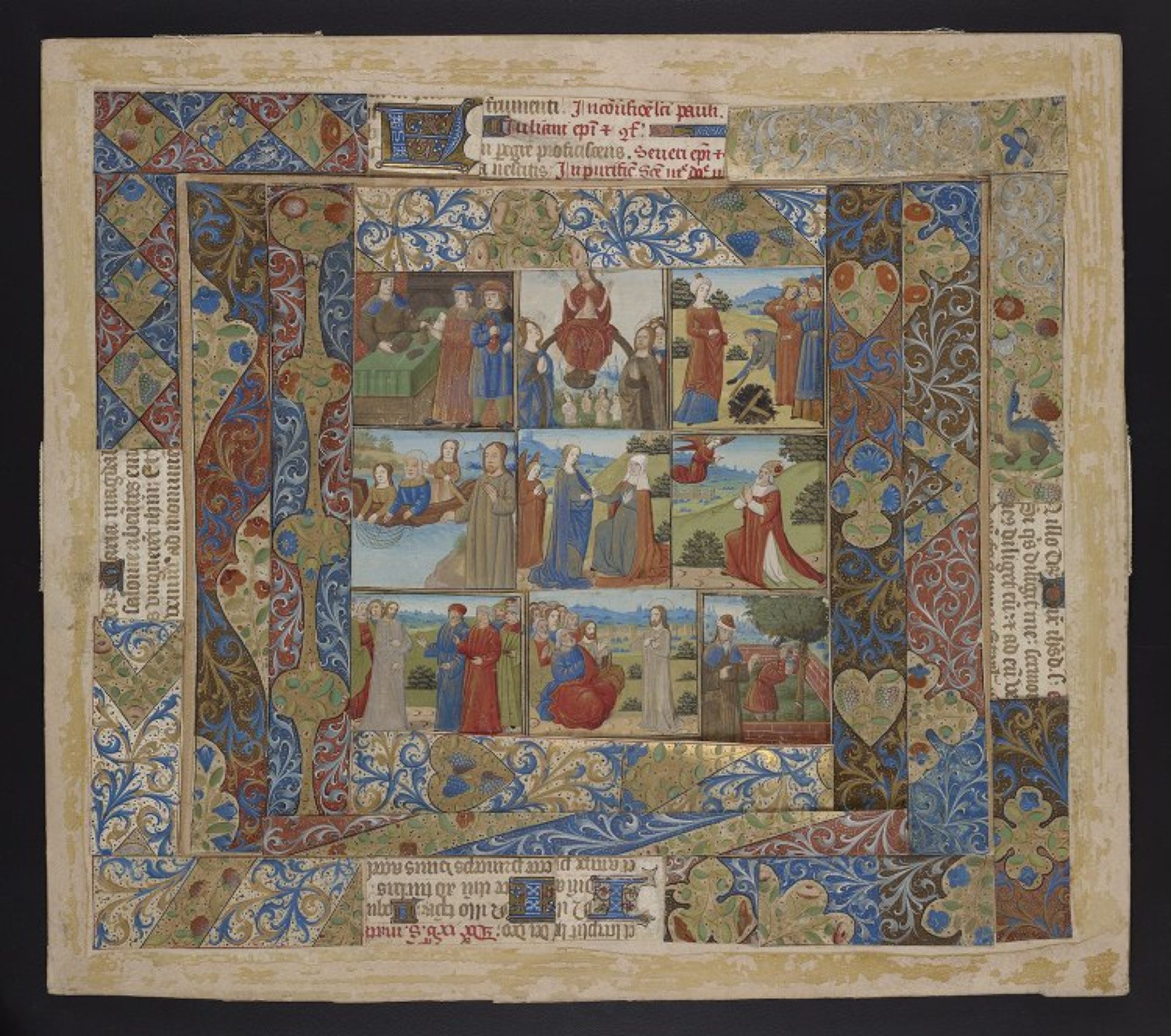

Ceci n’est pas un manuscrit: Summary of Mellon Seminar, February 19th 2018

This post is a summary of a Mellon Seminar I presented at the Price Lab for Digital Humanities at the University of Pennsylvania on February 19th, 2018. I will be presenting an expanded version of this talk at the Rare Book School in Philadelphia, PA, on June 12th, 2018.
